Taken from IEBlog

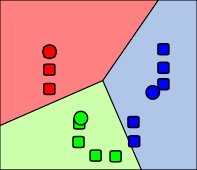

Regions that determine the direction of tilt.

The center region simply causes the tile to be depressed, like an HTML button or similar control. The first step toward emulating this behavior is to determine the direction of the tilt. Once the direction is chosen, we must apply the correct CSS3 3D transform to the tile.

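Let's first define our points of interest relative to our object, which is described by its top-left coordinate (left, top), its width (width), and its height (height). All coordinates are page coordinates, so y increases downward.

1. Center defined by rectangle:

(left + (width / 2) - (.1 * width), top + (height / 2) - (.1 * height)) with width .2 * width and height .2 * height.

2. Descending line, running from the top-left corner to the bottom-right corner, defined by point (left, top) and slope (height / width). The slope is positive because y grows downward on the page:

y - top = (height / width) * (x - left)

3. Ascending line, running from the bottom-left corner to the top-right corner, defined by point (left, top + height) and slope (-height / width):

y - (top + height) = (-height / width) * (x - left)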
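To make the geometry concrete, here is a minimal sketch of the region test as a plain function with no DOM access. The name tiltRegion and its parameter order are my own choices for illustration, and it assumes page coordinates where y grows downward.

```javascript
// Hypothetical helper (not part of the original code): classifies a point
// into "center", "top", "right", "bottom" or "left".
// Assumes page coordinates, where y grows downward.
function tiltRegion(x, y, left, top, width, height) {
    var centerX = left + width / 2,
        centerY = top + height / 2;

    // Center rectangle: 20% of the width and height, centered on the tile.
    if (Math.abs(x - centerX) < 0.1 * width &&
        Math.abs(y - centerY) < 0.1 * height) {
        return "center";
    }

    // y-values of the two diagonals at the point's x position.
    var slope = height / width,
        descendingY = top + slope * (x - left),
        ascendingY = top + height - slope * (x - left);

    if (y < descendingY) {
        // Above the descending diagonal: top or right.
        return y < ascendingY ? "top" : "right";
    }
    // On or below the descending diagonal: bottom or left.
    return y > ascendingY ? "bottom" : "left";
}

// Example: a 200 x 100 tile whose top-left corner is at (50, 50).
console.log(tiltRegion(150, 100, 50, 50, 200, 100)); // center
console.log(tiltRegion(150, 60, 50, 50, 200, 100));  // top
console.log(tiltRegion(240, 100, 50, 50, 200, 100)); // right
console.log(tiltRegion(150, 140, 50, 50, 200, 100)); // bottom
console.log(tiltRegion(60, 100, 50, 50, 200, 100));  // left
```

Keeping the classification separate from the event handler makes it easy to check the region boundaries without a browser.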

Now that we've defined our points of interest, we can take the incoming click and determine the direction of the tilt. The code below installs a mousedown event handler on every element with class "tilt", plus a mouseup handler that clears the transform.

    $(".tilt").each(function () {
        $(this).mousedown(function (event) {
            // Cache the tile, its geometry, and the click position (page coordinates).
            var $tile = $(this),
                offset = $tile.offset(),
                width = $tile.outerWidth(),
                height = $tile.outerHeight(),
                x = event.pageX,
                y = event.pageY;

            // Does the click reside in the center of the object?
            if (x > offset.left + (width / 2) - (0.1 * width) &&
                x < offset.left + (width / 2) + (0.1 * width) &&
                y > offset.top + (height / 2) - (0.1 * height) &&
                y < offset.top + (height / 2) + (0.1 * height)) {
                $tile.css("transform", "perspective(500px) translateZ(-15px)");
            } else {
                // y-values of the two diagonals at the click's x position.
                var slope = height / width,
                    descendingY = (slope * (x - offset.left)) + offset.top,
                    ascendingY = (-slope * (x - offset.left)) + offset.top + height;

                if (y < descendingY) {
                    if (y < ascendingY) {
                        // top region
                        $tile.css("transform", "perspective(500px) rotateX(8deg)");
                    } else {
                        // right region
                        $tile.css("transform", "perspective(500px) rotateY(8deg)");
                    }
                } else {
                    if (y > ascendingY) {
                        // bottom region
                        $tile.css("transform", "perspective(500px) rotateX(-8deg)");
                    } else {
                        // left region
                        $tile.css("transform", "perspective(500px) rotateY(-8deg)");
                    }
                }
            }
        });

        // Clear the transform when the mouse button is released over the tile.
        $(this).mouseup(function (event) {
            $(this).css("transform", "");
        });
    });
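If you want to tweak the tilt angle or the perspective distance, one option is to build the transform string in a single place. The sketch below is only an illustration: tiltTransform, its region names, and its optional angle and perspective parameters are hypothetical, with defaults that match the values used in the handler (8 degrees, 500px, and a 15px push for the center).

```javascript
// Hypothetical helper (not part of the original code): returns the CSS
// transform for a region name, or "" for an unknown region.
function tiltTransform(region, angle, perspective) {
    // Defaults mirror the values used in the mousedown handler.
    angle = angle === undefined ? 8 : angle;
    perspective = perspective === undefined ? 500 : perspective;

    var prefix = "perspective(" + perspective + "px) ";

    switch (region) {
    case "center":
        return prefix + "translateZ(-15px)";
    case "top":
        return prefix + "rotateX(" + angle + "deg)";
    case "right":
        return prefix + "rotateY(" + angle + "deg)";
    case "bottom":
        return prefix + "rotateX(" + (-angle) + "deg)";
    case "left":
        return prefix + "rotateY(" + (-angle) + "deg)";
    default:
        return "";
    }
}

console.log(tiltTransform("top"));           // perspective(500px) rotateX(8deg)
console.log(tiltTransform("left", 12, 800)); // perspective(800px) rotateY(-12deg)
```

The returned string can be passed directly to jQuery's .css("transform", ...), and the empty string clears the transform the same way the mouseup handler does.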

Here is a working example. Click the image to see the tilt effect:

Let me know in the comments below if you find issues with any particular browser or have other feedback. I should also plug the tools that helped me with this code:

- Tool to see visually what different CSS3 3D transformations do: http://ie.microsoft.com/testdrive/Graphics/hands-on-css3/hands-on_3d-transforms.htm

- IE blog talking about new support for CSS3: http://blogs.msdn.com/b/ie/archive/2012/02/02/css3-3d-transforms-in-ie10.aspx