In line with my previous post, I would like to take a look at using a technique called Nonnegative matrix factorization for creating an unsupervised learning classifier. The idea is given a matrix with no negative entries, factor the matrix into two other matrices such that their product is approximately equal to the original matrix. Assuming such a factorization exists, we can extract information from the resulting matrices that help describe the dependencies of the entries in the original matrix. By knowing the dependencies, we can see what elements are related to each other, and group them accordingly. Let's do an example by hand first with A, a 4x2 matrix :

A = [1 1;

2 1;

4 3;

5 4];

Suppose we discover two matrices W and H such that W*H approximately equal to A:

W = [0.29291 1.85124;

1.94600 0.26850;

2.53183 3.97099;

2.82474 5.82223];

H = [0.97449 0.44915;

0.38599 0.46911];

W*H = [1.0000 1.0000;

2.0000 1.0000;

4.0000 3.0000;

5.0000 4.0000];

What do the values in W and H say about the data in A? Let's examine just row 3 in W*H:

2.53183 * 0.97449 + 3.97099 * 0.38599 % = 4.0000 2.53183 * 0.44915 + 3.97099 * 0.46911 % = 3.0000

We notice that 3.97099 from row 3 of W is greater than 2.53183 of the same row. This means that row 3 in the result of W*H will be more heavily influenced by the second row of H ([0.83599 0.46911]). By looking at which column is greater in W, we can divide the data into two groups, one group more influenced by the first row of H (row 2 of A), and another group more influenced on the second row of H (rows 1, 3, & 4 of A). Using this logic, we can treat H as a sort of basis for the entries in W. Using this understanding of the matrices W and H, we can say that W defines which group an entry in A belongs where H defines the basis of the entire group. By controlling the dimensions of W and H, we can chose how many groups we want the factorization to create.

There has been much research into algorithms that actually can find the nonnegative matrix factorization of a matrix, but I will show just one of the most basic and earliest ones originating with Lee and Seung. Essentially the algorithm begins with an initial guess of W and H and then iteratively updates them using a rule proven not to increase the error of the approximation of A. Here is an Octave/Matlab implementation:

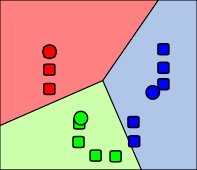

function [W, H] = nnmf(A, k) %nnmf factorizes matrix A into to matrices W, H such that W*H ~= A % A must be non negative % k is used to define the dimensions of W and H m = size(A, 1); % # of rows in A n = size(A, 2); % # of cols in A W = rand(m, k); H = rand(k, n); for i = 1:1500 % update rule proven to not increase the % error of the approximation % when cost = (1/2) * sum(sum((A - W*H) .^ 2)); W = W .* (A * H') ./ (W*H*H' + 10^-9); H = H .* (W' * A) ./ (W'*W*H + 10^-9); end endUsing this algorithm, we can now create a classifier based on nonnegative matrix factorization. We start with a set of points and then decide how many groups we want to classify the data into. When then supply this information into the algorithm to get a factorization.

X = [1 1;

2 1;

4 3;

5 4];

[W H] = nnmf(X, 2)

W =

1.12234 0.19811

0.31776 1.80444

2.56244 2.20065

3.68479 2.39876

H =

0.71766 0.81862

0.98200 0.41003

Now to better see the classification, we can create a matrix Wn, such that the 1 entry in each row is the maximum column from W for that row. This will indicate which group a data entry belongs.

Wn = 1 0 0 1 1 0 1 0

So given the original 4 data points, we have classified them into two groups. Similarly to k-means clustering, this algorithm can be used to group extremely complex groups of data, such as web results, documents, spam, etc. Please comment if I can explain more clearly or missed any crucial information.